Compare commits

merge into: jmg:main

jmg:gm/2021-09-23T00Z/github.com--lark-parser-lark/1.0b

jmg:gm/2021-09-23T00Z/github.com--lark-parser-lark/MegaIng-fix-818

jmg:gm/2021-09-23T00Z/github.com--lark-parser-lark/antlr

jmg:gm/2021-09-23T00Z/github.com--lark-parser-lark/embedded_discard

jmg:gm/2021-09-23T00Z/github.com--lark-parser-lark/end_symbol_2021

jmg:gm/2021-09-23T00Z/github.com--lark-parser-lark/inline

jmg:gm/2021-09-23T00Z/github.com--lark-parser-lark/master

jmg:gm/2021-09-23T00Z/github.com--lark-parser-lark/priority_decay

jmg:gm/2021-09-23T00Z/github.com--lark-parser-lark/tree_templates

jmg:gm/2021-09-23T00Z/github.com--lark-parser-lark/v0.12.0

jmg:main

pull from: jmg:gm/2021-09-23T00Z/github.com--lark-parser-lark/embedded_discard

jmg:gm/2021-09-23T00Z/github.com--lark-parser-lark/1.0b

jmg:gm/2021-09-23T00Z/github.com--lark-parser-lark/MegaIng-fix-818

jmg:gm/2021-09-23T00Z/github.com--lark-parser-lark/antlr

jmg:gm/2021-09-23T00Z/github.com--lark-parser-lark/embedded_discard

jmg:gm/2021-09-23T00Z/github.com--lark-parser-lark/end_symbol_2021

jmg:gm/2021-09-23T00Z/github.com--lark-parser-lark/inline

jmg:gm/2021-09-23T00Z/github.com--lark-parser-lark/master

jmg:gm/2021-09-23T00Z/github.com--lark-parser-lark/priority_decay

jmg:gm/2021-09-23T00Z/github.com--lark-parser-lark/tree_templates

jmg:gm/2021-09-23T00Z/github.com--lark-parser-lark/v0.12.0

jmg:main

No commits in common. 'main' and 'gm/2021-09-23T00Z/github.com--lark-parser-lark/embedded_discard' have entirely different histories.

No commits in common. 'main' and 'gm/2021-09-23T00Z/github.com--lark-parser-lark/embedded_discard' have entirely different histories.

100 changed files with 12178 additions and 74 deletions

Split View

Diff Options

-

+11 -1.gitignore

-

+3 -0.gitmodules

-

+13 -0.travis.yml

-

+19 -0LICENSE

-

+1 -0MANIFEST.in

-

+183 -23README.md

-

+227 -0docs/classes.md

-

BINdocs/comparison_memory.png

-

BINdocs/comparison_runtime.png

-

+32 -0docs/features.md

-

+172 -0docs/grammar.md

-

+63 -0docs/how_to_develop.md

-

+46 -0docs/how_to_use.md

-

+51 -0docs/index.md

-

+444 -0docs/json_tutorial.md

-

BINdocs/lark_cheatsheet.pdf

-

+49 -0docs/parsers.md

-

+63 -0docs/philosophy.md

-

+76 -0docs/recipes.md

-

+147 -0docs/tree_construction.md

-

+0 -13doupdate.sh

-

+33 -0examples/README.md

-

+0 -0examples/__init__.py

-

+75 -0examples/calc.py

-

+42 -0examples/conf_earley.py

-

+38 -0examples/conf_lalr.py

-

+56 -0examples/custom_lexer.py

-

+76 -0examples/error_reporting_lalr.py

-

BINexamples/fruitflies.png

-

+49 -0examples/fruitflies.py

-

+52 -0examples/indented_tree.py

-

+81 -0examples/json_parser.py

-

+50 -0examples/lark.lark

-

+21 -0examples/lark_grammar.py

-

+168 -0examples/python2.lark

-

+187 -0examples/python3.lark

-

+82 -0examples/python_parser.py

-

+201 -0examples/qscintilla_json.py

-

+52 -0examples/reconstruct_json.py

-

+1 -0examples/relative-imports/multiple2.lark

-

+5 -0examples/relative-imports/multiple3.lark

-

+5 -0examples/relative-imports/multiples.lark

-

+28 -0examples/relative-imports/multiples.py

-

+1 -0examples/standalone/create_standalone.sh

-

+21 -0examples/standalone/json.lark

-

+1838 -0examples/standalone/json_parser.py

-

+25 -0examples/standalone/json_parser_main.py

-

+85 -0examples/turtle_dsl.py

-

+8 -0lark/__init__.py

-

+28 -0lark/common.py

-

+98 -0lark/exceptions.py

-

+104 -0lark/grammar.py

-

+50 -0lark/grammars/common.lark

-

+61 -0lark/indenter.py

-

+308 -0lark/lark.py

-

+375 -0lark/lexer.py

-

+860 -0lark/load_grammar.py

-

+266 -0lark/parse_tree_builder.py

-

+219 -0lark/parser_frontends.py

-

+0 -0lark/parsers/__init__.py

-

+345 -0lark/parsers/cyk.py

-

+322 -0lark/parsers/earley.py

-

+75 -0lark/parsers/earley_common.py

-

+430 -0lark/parsers/earley_forest.py

-

+155 -0lark/parsers/grammar_analysis.py

-

+136 -0lark/parsers/lalr_analysis.py

-

+107 -0lark/parsers/lalr_parser.py

-

+149 -0lark/parsers/xearley.py

-

+129 -0lark/reconstruct.py

-

+0 -0lark/tools/__init__.py

-

+190 -0lark/tools/nearley.py

-

+39 -0lark/tools/serialize.py

-

+137 -0lark/tools/standalone.py

-

+183 -0lark/tree.py

-

+241 -0lark/utils.py

-

+273 -0lark/visitors.py

-

+13 -0mkdocs.yml

-

+1 -0nearley-requirements.txt

-

+10 -0readthedocs.yml

-

+0 -35reponames.py

-

+0 -2repos.txt

-

+10 -0setup.cfg

-

+62 -0setup.py

-

+0 -0tests/__init__.py

-

+36 -0tests/__main__.py

-

+10 -0tests/grammars/ab.lark

-

+6 -0tests/grammars/leading_underscore_grammar.lark

-

+3 -0tests/grammars/test.lark

-

+4 -0tests/grammars/test_relative_import_of_nested_grammar.lark

-

+4 -0tests/grammars/test_relative_import_of_nested_grammar__grammar_to_import.lark

-

+1 -0tests/grammars/test_relative_import_of_nested_grammar__nested_grammar.lark

-

+7 -0tests/grammars/three_rules_using_same_token.lark

-

+0 -0tests/test_nearley/__init__.py

-

+3 -0tests/test_nearley/grammars/include_unicode.ne

-

+1 -0tests/test_nearley/grammars/unicode.ne

-

+1 -0tests/test_nearley/nearley

-

+105 -0tests/test_nearley/test_nearley.py

-

+1618 -0tests/test_parser.py

-

+116 -0tests/test_reconstructor.py

-

+7 -0tests/test_relative_import.lark

+ 11

- 1

.gitignore

View File

| @@ -1 +1,11 @@ | |||

| __pycache__ | |||

| *.pyc | |||

| *.pyo | |||

| /.tox | |||

| /lark_parser.egg-info/** | |||

| tags | |||

| .vscode | |||

| .idea | |||

| .ropeproject | |||

| .cache | |||

| /dist | |||

| /build | |||

+ 3

- 0

.gitmodules

View File

| @@ -0,0 +1,3 @@ | |||

| [submodule "tests/test_nearley/nearley"] | |||

| path = tests/test_nearley/nearley | |||

| url = https://github.com/Hardmath123/nearley | |||

+ 13

- 0

.travis.yml

View File

| @@ -0,0 +1,13 @@ | |||

| dist: xenial | |||

| language: python | |||

| python: | |||

| - "2.7" | |||

| - "3.4" | |||

| - "3.5" | |||

| - "3.6" | |||

| - "3.7" | |||

| - "pypy2.7-6.0" | |||

| - "pypy3.5-6.0" | |||

| install: pip install tox-travis | |||

| script: | |||

| - tox | |||

+ 19

- 0

LICENSE

View File

| @@ -0,0 +1,19 @@ | |||

| Copyright © 2017 Erez Shinan | |||

| Permission is hereby granted, free of charge, to any person obtaining a copy of | |||

| this software and associated documentation files (the "Software"), to deal in | |||

| the Software without restriction, including without limitation the rights to | |||

| use, copy, modify, merge, publish, distribute, sublicense, and/or sell copies of | |||

| the Software, and to permit persons to whom the Software is furnished to do so, | |||

| subject to the following conditions: | |||

| The above copyright notice and this permission notice shall be included in all | |||

| copies or substantial portions of the Software. | |||

| THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR | |||

| IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY, FITNESS | |||

| FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE AUTHORS OR | |||

| COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER LIABILITY, WHETHER | |||

| IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM, OUT OF OR IN | |||

| CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE SOFTWARE. | |||

+ 1

- 0

MANIFEST.in

View File

| @@ -0,0 +1 @@ | |||

| include README.md LICENSE docs/* examples/*.py examples/*.png examples/*.lark tests/*.py tests/*.lark tests/grammars/* tests/test_nearley/*.py tests/test_nearley/grammars/* | |||

+ 183

- 23

README.md

View File

| @@ -1,31 +1,191 @@ | |||

| GITMIRROR | |||

| ========= | |||

| # Lark - a modern parsing library for Python | |||

| This repo is a mirror of various repositories that I want to keep track of. | |||

| I realized that git, w/ it's inherently dedupability, and the ability to | |||

| store many trees in a single repo, that it'd be easy to create a repo that | |||

| regularly clones/mirrors other source repos. Not only this, but the | |||

| state of the tags and branches can be archived on a daily basis, | |||

| consuming very little space. | |||

| Parse any context-free grammar, FAST and EASY! | |||

| The main reason that I want this is from a supply chain availability | |||

| perspective. As a consumer of source, it isn't always guaranteed that | |||

| the project you depend upon will continue to exist in the future. It | |||

| could also be that older version are removed, etc. | |||

| **Beginners**: Lark is not just another parser. It can parse any grammar you throw at it, no matter how complicated or ambiguous, and do so efficiently. It also constructs a parse-tree for you, without additional code on your part. | |||

| **Experts**: Lark implements both Earley(SPPF) and LALR(1), and several different lexers, so you can trade-off power and speed, according to your requirements. It also provides a variety of sophisticated features and utilities. | |||

| Quick start | |||

| ----------- | |||

| Lark can: | |||

| 1. Update the file `repos.txt` with a list of urls that you want to mirror. | |||

| 2. Run the script `doupdate.sh` to mirror all the repos. | |||

| 3. Optionally run `git push --mirror origin` to store the data on the server. | |||

| - Parse all context-free grammars, and handle any ambiguity | |||

| - Build a parse-tree automagically, no construction code required | |||

| - Outperform all other Python libraries when using LALR(1) (Yes, including PLY) | |||

| - Run on every Python interpreter (it's pure-python) | |||

| - Generate a stand-alone parser (for LALR(1) grammars) | |||

| And many more features. Read ahead and find out. | |||

| Process | |||

| ------- | |||

| Most importantly, Lark will save you time and prevent you from getting parsing headaches. | |||

| 1. Repo will self update main to get latest repos/code to mirror. | |||

| 2. Fetch the repos to mirror into their respective date tagged tags/branches. | |||

| 3. Push the tags/branches to the parent. | |||

| 4. Repeat | |||

| ### Quick links | |||

| - [Documentation @readthedocs](https://lark-parser.readthedocs.io/) | |||

| - [Cheatsheet (PDF)](/docs/lark_cheatsheet.pdf) | |||

| - [Tutorial](/docs/json_tutorial.md) for writing a JSON parser. | |||

| - Blog post: [How to write a DSL with Lark](http://blog.erezsh.com/how-to-write-a-dsl-in-python-with-lark/) | |||

| - [Gitter chat](https://gitter.im/lark-parser/Lobby) | |||

| ### Install Lark | |||

| $ pip install lark-parser | |||

| Lark has no dependencies. | |||

| [](https://travis-ci.org/lark-parser/lark) | |||

| ### Syntax Highlighting (new) | |||

| Lark now provides syntax highlighting for its grammar files (\*.lark): | |||

| - [Sublime Text & TextMate](https://github.com/lark-parser/lark_syntax) | |||

| - [vscode](https://github.com/lark-parser/vscode-lark) | |||

| ### Hello World | |||

| Here is a little program to parse "Hello, World!" (Or any other similar phrase): | |||

| ```python | |||

| from lark import Lark | |||

| l = Lark('''start: WORD "," WORD "!" | |||

| %import common.WORD // imports from terminal library | |||

| %ignore " " // Disregard spaces in text | |||

| ''') | |||

| print( l.parse("Hello, World!") ) | |||

| ``` | |||

| And the output is: | |||

| ```python | |||

| Tree(start, [Token(WORD, 'Hello'), Token(WORD, 'World')]) | |||

| ``` | |||

| Notice punctuation doesn't appear in the resulting tree. It's automatically filtered away by Lark. | |||

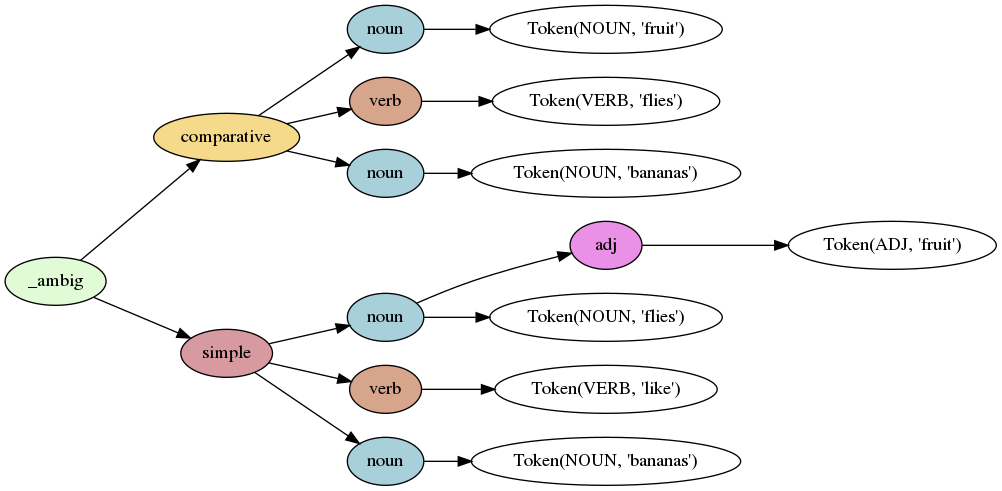

| ### Fruit flies like bananas | |||

| Lark is great at handling ambiguity. Let's parse the phrase "fruit flies like bananas": | |||

|  | |||

| See more [examples here](https://github.com/lark-parser/lark/tree/master/examples) | |||

| ## List of main features | |||

| - Builds a parse-tree (AST) automagically, based on the structure of the grammar | |||

| - **Earley** parser | |||

| - Can parse all context-free grammars | |||

| - Full support for ambiguous grammars | |||

| - **LALR(1)** parser | |||

| - Fast and light, competitive with PLY | |||

| - Can generate a stand-alone parser | |||

| - **CYK** parser, for highly ambiguous grammars (NEW! Courtesy of [ehudt](https://github.com/ehudt)) | |||

| - **EBNF** grammar | |||

| - **Unicode** fully supported | |||

| - **Python 2 & 3** compatible | |||

| - Automatic line & column tracking | |||

| - Standard library of terminals (strings, numbers, names, etc.) | |||

| - Import grammars from Nearley.js | |||

| - Extensive test suite [](https://codecov.io/gh/erezsh/lark) | |||

| - And much more! | |||

| See the full list of [features here](https://lark-parser.readthedocs.io/en/latest/features/) | |||

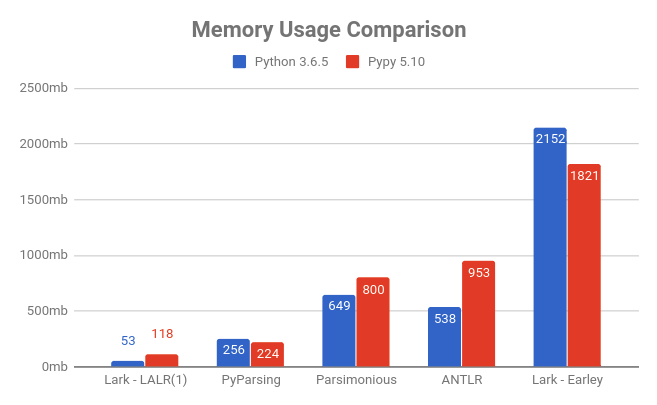

| ### Comparison to other libraries | |||

| #### Performance comparison | |||

| Lark is the fastest and lightest (lower is better) | |||

|  | |||

|  | |||

| Check out the [JSON tutorial](/docs/json_tutorial.md#conclusion) for more details on how the comparison was made. | |||

| *Note: I really wanted to add PLY to the benchmark, but I couldn't find a working JSON parser anywhere written in PLY. If anyone can point me to one that actually works, I would be happy to add it!* | |||

| *Note 2: The parsimonious code has been optimized for this specific test, unlike the other benchmarks (Lark included). Its "real-world" performance may not be as good.* | |||

| #### Feature comparison | |||

| | Library | Algorithm | Grammar | Builds tree? | Supports ambiguity? | Can handle every CFG? | Line/Column tracking | Generates Stand-alone | |||

| |:--------|:----------|:----|:--------|:------------|:------------|:----------|:---------- | |||

| | **Lark** | Earley/LALR(1) | EBNF | Yes! | Yes! | Yes! | Yes! | Yes! (LALR only) | | |||

| | [PLY](http://www.dabeaz.com/ply/) | LALR(1) | BNF | No | No | No | No | No | | |||

| | [PyParsing](http://pyparsing.wikispaces.com/) | PEG | Combinators | No | No | No\* | No | No | | |||

| | [Parsley](https://pypi.python.org/pypi/Parsley) | PEG | EBNF | No | No | No\* | No | No | | |||

| | [Parsimonious](https://github.com/erikrose/parsimonious) | PEG | EBNF | Yes | No | No\* | No | No | | |||

| | [ANTLR](https://github.com/antlr/antlr4) | LL(*) | EBNF | Yes | No | Yes? | Yes | No | | |||

| (\* *PEGs cannot handle non-deterministic grammars. Also, according to Wikipedia, it remains unanswered whether PEGs can really parse all deterministic CFGs*) | |||

| ### Projects using Lark | |||

| - [storyscript](https://github.com/storyscript/storyscript) - The programming language for Application Storytelling | |||

| - [tartiflette](https://github.com/dailymotion/tartiflette) - a GraphQL engine by Dailymotion. Lark is used to parse the GraphQL schemas definitions. | |||

| - [Hypothesis](https://github.com/HypothesisWorks/hypothesis) - Library for property-based testing | |||

| - [mappyfile](https://github.com/geographika/mappyfile) - a MapFile parser for working with MapServer configuration | |||

| - [synapse](https://github.com/vertexproject/synapse) - an intelligence analysis platform | |||

| - [Command-Block-Assembly](https://github.com/simon816/Command-Block-Assembly) - An assembly language, and C compiler, for Minecraft commands | |||

| - [SPFlow](https://github.com/SPFlow/SPFlow) - Library for Sum-Product Networks | |||

| - [Torchani](https://github.com/aiqm/torchani) - Accurate Neural Network Potential on PyTorch | |||

| - [required](https://github.com/shezadkhan137/required) - multi-field validation using docstrings | |||

| - [miniwdl](https://github.com/chanzuckerberg/miniwdl) - A static analysis toolkit for the Workflow Description Language | |||

| - [pytreeview](https://gitlab.com/parmenti/pytreeview) - a lightweight tree-based grammar explorer | |||

| Using Lark? Send me a message and I'll add your project! | |||

| ### How to use Nearley grammars in Lark | |||

| Lark comes with a tool to convert grammars from [Nearley](https://github.com/Hardmath123/nearley), a popular Earley library for Javascript. It uses [Js2Py](https://github.com/PiotrDabkowski/Js2Py) to convert and run the Javascript postprocessing code segments. | |||

| Here's an example: | |||

| ```bash | |||

| git clone https://github.com/Hardmath123/nearley | |||

| python -m lark.tools.nearley nearley/examples/calculator/arithmetic.ne main nearley > ncalc.py | |||

| ``` | |||

| You can use the output as a regular python module: | |||

| ```python | |||

| >>> import ncalc | |||

| >>> ncalc.parse('sin(pi/4) ^ e') | |||

| 0.38981434460254655 | |||

| ``` | |||

| ## License | |||

| Lark uses the [MIT license](LICENSE). | |||

| (The standalone tool is under GPL2) | |||

| ## Contribute | |||

| Lark is currently accepting pull-requests. See [How to develop Lark](/docs/how_to_develop.md) | |||

| ## Donate | |||

| If you like Lark and feel like donating, you can do so at my [patreon page](https://www.patreon.com/erezsh). | |||

| If you wish for a specific feature to get a higher priority, you can request it in a follow-up email, and I'll consider it favorably. | |||

| ## Contact | |||

| If you have any questions or want my assistance, you can email me at erezshin at gmail com. | |||

| I'm also available for contract work. | |||

| -- [Erez](https://github.com/erezsh) | |||

+ 227

- 0

docs/classes.md

View File

| @@ -0,0 +1,227 @@ | |||

| # Classes - Reference | |||

| This page details the important classes in Lark. | |||

| ---- | |||

| ## Lark | |||

| The Lark class is the main interface for the library. It's mostly a thin wrapper for the many different parsers, and for the tree constructor. | |||

| ### Methods | |||

| #### \_\_init\_\_(self, grammar, **options) | |||

| The Lark class accepts a grammar string or file object, and keyword options: | |||

| * start - The symbol in the grammar that begins the parse (Default: `"start"`) | |||

| * parser - Decides which parser engine to use, "earley", "lalr" or "cyk". (Default: `"earley"`) | |||

| * lexer - Overrides default lexer. | |||

| * transformer - Applies the transformer instead of building a parse tree (only allowed with parser="lalr") | |||

| * postlex - Lexer post-processing (Default: None. only works when lexer is "standard" or "contextual") | |||

| * ambiguity (only relevant for earley and cyk) | |||

| * "explicit" - Return all derivations inside an "_ambig" data node. | |||

| * "resolve" - Let the parser choose the best derivation (greedy for tokens, non-greedy for rules. Default) | |||

| * debug - Display warnings (such as Shift-Reduce warnings for LALR) | |||

| * keep_all_tokens - Don't throw away any terminals from the tree (Default=False) | |||

| * propagate_positions - Propagate line/column count to tree nodes (default=False) | |||

| * lexer_callbacks - A dictionary of callbacks of type f(Token) -> Token, used to interface with the lexer Token generation. Only works with the standard and contextual lexers. See [Recipes](recipes.md) for more information. | |||

| #### parse(self, text) | |||

| Return a complete parse tree for the text (of type Tree) | |||

| If a transformer is supplied to `__init__`, returns whatever is the result of the transformation. | |||

| ---- | |||

| ## Tree | |||

| The main tree class | |||

| ### Properties | |||

| * `data` - The name of the rule or alias | |||

| * `children` - List of matched sub-rules and terminals | |||

| * `meta` - Line & Column numbers, if using `propagate_positions` | |||

| ### Methods | |||

| #### \_\_init\_\_(self, data, children) | |||

| Creates a new tree, and stores "data" and "children" in attributes of the same name. | |||

| #### pretty(self, indent_str=' ') | |||

| Returns an indented string representation of the tree. Great for debugging. | |||

| #### find_pred(self, pred) | |||

| Returns all nodes of the tree that evaluate pred(node) as true. | |||

| #### find_data(self, data) | |||

| Returns all nodes of the tree whose data equals the given data. | |||

| #### iter_subtrees(self) | |||

| Depth-first iteration. | |||

| Iterates over all the subtrees, never returning to the same node twice (Lark's parse-tree is actually a DAG). | |||

| #### iter_subtrees_topdown(self) | |||

| Breadth-first iteration. | |||

| Iterates over all the subtrees, return nodes in order like pretty() does. | |||

| #### \_\_eq\_\_, \_\_hash\_\_ | |||

| Trees can be hashed and compared. | |||

| ---- | |||

| ## Transformers & Visitors | |||

| Transformers & Visitors provide a convenient interface to process the parse-trees that Lark returns. | |||

| They are used by inheriting from the correct class (visitor or transformer), and implementing methods corresponding to the rule you wish to process. Each methods accepts the children as an argument. That can be modified using the `v-args` decorator, which allows to inline the arguments (akin to `*args`), or add the tree `meta` property as an argument. | |||

| See: https://github.com/lark-parser/lark/blob/master/lark/visitors.py | |||

| ### Visitors | |||

| Visitors visit each node of the tree, and run the appropriate method on it according to the node's data. | |||

| They work bottom-up, starting with the leaves and ending at the root of the tree. | |||

| **Example** | |||

| ```python | |||

| class IncreaseAllNumbers(Visitor): | |||

| def number(self, tree): | |||

| assert tree.data == "number" | |||

| tree.children[0] += 1 | |||

| IncreaseAllNumbers().visit(parse_tree) | |||

| ``` | |||

| There are two classes that implement the visitor interface: | |||

| * Visitor - Visit every node (without recursion) | |||

| * Visitor_Recursive - Visit every node using recursion. Slightly faster. | |||

| ### Transformers | |||

| Transformers visit each node of the tree, and run the appropriate method on it according to the node's data. | |||

| They work bottom-up (or: depth-first), starting with the leaves and ending at the root of the tree. | |||

| Transformers can be used to implement map & reduce patterns. | |||

| Because nodes are reduced from leaf to root, at any point the callbacks may assume the children have already been transformed (if applicable). | |||

| Transformers can be chained into a new transformer by using multiplication. | |||

| **Example:** | |||

| ```python | |||

| from lark import Tree, Transformer | |||

| class EvalExpressions(Transformer): | |||

| def expr(self, args): | |||

| return eval(args[0]) | |||

| t = Tree('a', [Tree('expr', ['1+2'])]) | |||

| print(EvalExpressions().transform( t )) | |||

| # Prints: Tree(a, [3]) | |||

| ``` | |||

| Here are the classes that implement the transformer interface: | |||

| - Transformer - Recursively transforms the tree. This is the one you probably want. | |||

| - Transformer_InPlace - Non-recursive. Changes the tree in-place instead of returning new instances | |||

| - Transformer_InPlaceRecursive - Recursive. Changes the tree in-place instead of returning new instances | |||

| ### v_args | |||

| `v_args` is a decorator. | |||

| By default, callback methods of transformers/visitors accept one argument: a list of the node's children. `v_args` can modify this behavior. | |||

| When used on a transformer/visitor class definition, it applies to all the callback methods inside it. | |||

| `v_args` accepts one of three flags: | |||

| - `inline` - Children are provided as `*args` instead of a list argument (not recommended for very long lists). | |||

| - `meta` - Provides two arguments: `children` and `meta` (instead of just the first) | |||

| - `tree` - Provides the entire tree as the argument, instead of the children. | |||

| Examples: | |||

| ```python | |||

| @v_args(inline=True) | |||

| class SolveArith(Transformer): | |||

| def add(self, left, right): | |||

| return left + right | |||

| class ReverseNotation(Transformer_InPlace): | |||

| @v_args(tree=True): | |||

| def tree_node(self, tree): | |||

| tree.children = tree.children[::-1] | |||

| ``` | |||

| ### Discard | |||

| When raising the `Discard` exception in a transformer callback, that node is discarded and won't appear in the parent. | |||

| ## Token | |||

| When using a lexer, the resulting tokens in the trees will be of the Token class, which inherits from Python's string. So, normal string comparisons and operations will work as expected. Tokens also have other useful attributes: | |||

| * `type` - Name of the token (as specified in grammar). | |||

| * `pos_in_stream` - the index of the token in the text | |||

| * `line` - The line of the token in the text (starting with 1) | |||

| * `column` - The column of the token in the text (starting with 1) | |||

| * `end_line` - The line where the token ends | |||

| * `end_column` - The next column after the end of the token. For example, if the token is a single character with a `column` value of 4, `end_column` will be 5. | |||

| ## UnexpectedInput | |||

| - `UnexpectedInput` | |||

| - `UnexpectedToken` - The parser recieved an unexpected token | |||

| - `UnexpectedCharacters` - The lexer encountered an unexpected string | |||

| After catching one of these exceptions, you may call the following helper methods to create a nicer error message: | |||

| ### Methods | |||

| #### get_context(text, span) | |||

| Returns a pretty string pinpointing the error in the text, with `span` amount of context characters around it. | |||

| (The parser doesn't hold a copy of the text it has to parse, so you have to provide it again) | |||

| #### match_examples(parse_fn, examples) | |||

| Allows you to detect what's wrong in the input text by matching against example errors. | |||

| Accepts the parse function (usually `lark_instance.parse`) and a dictionary of `{'example_string': value}`. | |||

| The function will iterate the dictionary until it finds a matching error, and return the corresponding value. | |||

| For an example usage, see: [examples/error_reporting_lalr.py](https://github.com/lark-parser/lark/blob/master/examples/error_reporting_lalr.py) | |||

BIN

docs/comparison_memory.png

View File

BIN

docs/comparison_runtime.png

View File

+ 32

- 0

docs/features.md

View File

| @@ -0,0 +1,32 @@ | |||

| # Main Features | |||

| - Earley parser, capable of parsing any context-free grammar | |||

| - Implements SPPF, for efficient parsing and storing of ambiguous grammars. | |||

| - LALR(1) parser, limited in power of expression, but very efficient in space and performance (O(n)). | |||

| - Implements a parse-aware lexer that provides a better power of expression than traditional LALR implementations (such as ply). | |||

| - EBNF-inspired grammar, with extra features (See: [Grammar Reference](grammar.md)) | |||

| - Builds a parse-tree (AST) automagically based on the grammar | |||

| - Stand-alone parser generator - create a small independent parser to embed in your project. | |||

| - Automatic line & column tracking | |||

| - Automatic terminal collision resolution | |||

| - Standard library of terminals (strings, numbers, names, etc.) | |||

| - Unicode fully supported | |||

| - Extensive test suite | |||

| - Python 2 & Python 3 compatible | |||

| - Pure-Python implementation | |||

| [Read more about the parsers](parsers.md) | |||

| # Extra features | |||

| - Import rules and tokens from other Lark grammars, for code reuse and modularity. | |||

| - Import grammars from Nearley.js | |||

| - CYK parser | |||

| ### Experimental features | |||

| - Automatic reconstruction of input from parse-tree (see examples) | |||

| ### Planned features (not implemented yet) | |||

| - Generate code in other languages than Python | |||

| - Grammar composition | |||

| - LALR(k) parser | |||

| - Full regexp-collision support using NFAs | |||

+ 172

- 0

docs/grammar.md

View File

| @@ -0,0 +1,172 @@ | |||

| # Grammar Reference | |||

| ## Definitions | |||

| **A grammar** is a list of rules and terminals, that together define a language. | |||

| Terminals define the alphabet of the language, while rules define its structure. | |||

| In Lark, a terminal may be a string, a regular expression, or a concatenation of these and other terminals. | |||

| Each rule is a list of terminals and rules, whose location and nesting define the structure of the resulting parse-tree. | |||

| A **parsing algorithm** is an algorithm that takes a grammar definition and a sequence of symbols (members of the alphabet), and matches the entirety of the sequence by searching for a structure that is allowed by the grammar. | |||

| ## General Syntax and notes | |||

| Grammars in Lark are based on [EBNF](https://en.wikipedia.org/wiki/Extended_Backus–Naur_form) syntax, with several enhancements. | |||

| Lark grammars are composed of a list of definitions and directives, each on its own line. A definition is either a named rule, or a named terminal. | |||

| **Comments** start with `//` and last to the end of the line (C++ style) | |||

| Lark begins the parse with the rule 'start', unless specified otherwise in the options. | |||

| Names of rules are always in lowercase, while names of terminals are always in uppercase. This distinction has practical effects, for the shape of the generated parse-tree, and the automatic construction of the lexer (aka tokenizer, or scanner). | |||

| ## Terminals | |||

| Terminals are used to match text into symbols. They can be defined as a combination of literals and other terminals. | |||

| **Syntax:** | |||

| ```html | |||

| <NAME> [. <priority>] : <literals-and-or-terminals> | |||

| ``` | |||

| Terminal names must be uppercase. | |||

| Literals can be one of: | |||

| * `"string"` | |||

| * `/regular expression+/` | |||

| * `"case-insensitive string"i` | |||

| * `/re with flags/imulx` | |||

| * Literal range: `"a".."z"`, `"1".."9"`, etc. | |||

| ### Priority | |||

| Terminals can be assigned priority only when using a lexer (future versions may support Earley's dynamic lexing). | |||

| Priority can be either positive or negative. In not specified for a terminal, it's assumed to be 1 (i.e. the default). | |||

| #### Notes for when using a lexer: | |||

| When using a lexer (standard or contextual), it is the grammar-author's responsibility to make sure the literals don't collide, or that if they do, they are matched in the desired order. Literals are matched in an order according to the following criteria: | |||

| 1. Highest priority first (priority is specified as: TERM.number: ...) | |||

| 2. Length of match (for regexps, the longest theoretical match is used) | |||

| 3. Length of literal / pattern definition | |||

| 4. Name | |||

| **Examples:** | |||

| ```perl | |||

| IF: "if" | |||

| INTEGER : /[0-9]+/ | |||

| INTEGER2 : ("0".."9")+ //# Same as INTEGER | |||

| DECIMAL.2: INTEGER "." INTEGER //# Will be matched before INTEGER | |||

| WHITESPACE: (" " | /\t/ )+ | |||

| SQL_SELECT: "select"i | |||

| ``` | |||

| ## Rules | |||

| **Syntax:** | |||

| ```html | |||

| <name> : <items-to-match> [-> <alias> ] | |||

| | ... | |||

| ``` | |||

| Names of rules and aliases are always in lowercase. | |||

| Rule definitions can be extended to the next line by using the OR operator (signified by a pipe: `|` ). | |||

| An alias is a name for the specific rule alternative. It affects tree construction. | |||

| Each item is one of: | |||

| * `rule` | |||

| * `TERMINAL` | |||

| * `"string literal"` or `/regexp literal/` | |||

| * `(item item ..)` - Group items | |||

| * `[item item ..]` - Maybe. Same as `(item item ..)?` | |||

| * `item?` - Zero or one instances of item ("maybe") | |||

| * `item*` - Zero or more instances of item | |||

| * `item+` - One or more instances of item | |||

| * `item ~ n` - Exactly *n* instances of item | |||

| * `item ~ n..m` - Between *n* to *m* instances of item (not recommended for wide ranges, due to performance issues) | |||

| **Examples:** | |||

| ```perl | |||

| hello_world: "hello" "world" | |||

| mul: [mul "*"] number //# Left-recursion is allowed! | |||

| expr: expr operator expr | |||

| | value //# Multi-line, belongs to expr | |||

| four_words: word ~ 4 | |||

| ``` | |||

| ### Priority | |||

| Rules can be assigned priority only when using Earley (future versions may support LALR as well). | |||

| Priority can be either positive or negative. In not specified for a terminal, it's assumed to be 1 (i.e. the default). | |||

| ## Directives | |||

| ### %ignore | |||

| All occurrences of the terminal will be ignored, and won't be part of the parse. | |||

| Using the `%ignore` directive results in a cleaner grammar. | |||

| It's especially important for the LALR(1) algorithm, because adding whitespace (or comments, or other extranous elements) explicitly in the grammar, harms its predictive abilities, which are based on a lookahead of 1. | |||

| **Syntax:** | |||

| ```html | |||

| %ignore <TERMINAL> | |||

| ``` | |||

| **Examples:** | |||

| ```perl | |||

| %ignore " " | |||

| COMMENT: "#" /[^\n]/* | |||

| %ignore COMMENT | |||

| ``` | |||

| ### %import | |||

| Allows to import terminals and rules from lark grammars. | |||

| When importing rules, all their dependencies will be imported into a namespace, to avoid collisions. It's not possible to override their dependencies (e.g. like you would when inheriting a class). | |||

| **Syntax:** | |||

| ```html | |||

| %import <module>.<TERMINAL> | |||

| %import <module>.<rule> | |||

| %import <module>.<TERMINAL> -> <NEWTERMINAL> | |||

| %import <module>.<rule> -> <newrule> | |||

| %import <module> (<TERM1> <TERM2> <rule1> <rule2>) | |||

| ``` | |||

| If the module path is absolute, Lark will attempt to load it from the built-in directory (currently, only `common.lark` is available). | |||

| If the module path is relative, such as `.path.to.file`, Lark will attempt to load it from the current working directory. Grammars must have the `.lark` extension. | |||

| The rule or terminal can be imported under an other name with the `->` syntax. | |||

| **Example:** | |||

| ```perl | |||

| %import common.NUMBER | |||

| %import .terminals_file (A B C) | |||

| %import .rules_file.rulea -> ruleb | |||

| ``` | |||

| Note that `%ignore` directives cannot be imported. Imported rules will abide by the `%ignore` directives declared in the main grammar. | |||

| ### %declare | |||

| Declare a terminal without defining it. Useful for plugins. | |||

+ 63

- 0

docs/how_to_develop.md

View File

| @@ -0,0 +1,63 @@ | |||

| # How to develop Lark - Guide | |||

| There are many ways you can help the project: | |||

| * Help solve issues | |||

| * Improve the documentation | |||

| * Write new grammars for Lark's library | |||

| * Write a blog post introducing Lark to your audience | |||

| * Port Lark to another language | |||

| * Help me with code developemnt | |||

| If you're interested in taking one of these on, let me know and I will provide more details and assist you in the process. | |||

| ## Unit Tests | |||

| Lark comes with an extensive set of tests. Many of the tests will run several times, once for each parser configuration. | |||

| To run the tests, just go to the lark project root, and run the command: | |||

| ```bash | |||

| python -m tests | |||

| ``` | |||

| or | |||

| ```bash | |||

| pypy -m tests | |||

| ``` | |||

| For a list of supported interpreters, you can consult the `tox.ini` file. | |||

| You can also run a single unittest using its class and method name, for example: | |||

| ```bash | |||

| ## test_package test_class_name.test_function_name | |||

| python -m tests TestLalrStandard.test_lexer_error_recovering | |||

| ``` | |||

| ### tox | |||

| To run all Unit Tests with tox, | |||

| install tox and Python 2.7 up to the latest python interpreter supported (consult the file tox.ini). | |||

| Then, | |||

| run the command `tox` on the root of this project (where the main setup.py file is on). | |||

| And, for example, | |||

| if you would like to only run the Unit Tests for Python version 2.7, | |||

| you can run the command `tox -e py27` | |||

| ### pytest | |||

| You can also run the tests using pytest: | |||

| ```bash | |||

| pytest tests | |||

| ``` | |||

| ### Using setup.py | |||

| Another way to run the tests is using setup.py: | |||

| ```bash | |||

| python setup.py test | |||

| ``` | |||

+ 46

- 0

docs/how_to_use.md

View File

| @@ -0,0 +1,46 @@ | |||

| # How To Use Lark - Guide | |||

| ## Work process | |||

| This is the recommended process for working with Lark: | |||

| 1. Collect or create input samples, that demonstrate key features or behaviors in the language you're trying to parse. | |||

| 2. Write a grammar. Try to aim for a structure that is intuitive, and in a way that imitates how you would explain your language to a fellow human. | |||

| 3. Try your grammar in Lark against each input sample. Make sure the resulting parse-trees make sense. | |||

| 4. Use Lark's grammar features to [shape the tree](tree_construction.md): Get rid of superfluous rules by inlining them, and use aliases when specific cases need clarification. | |||

| - You can perform steps 1-4 repeatedly, gradually growing your grammar to include more sentences. | |||

| 5. Create a transformer to evaluate the parse-tree into a structure you'll be comfortable to work with. This may include evaluating literals, merging branches, or even converting the entire tree into your own set of AST classes. | |||

| Of course, some specific use-cases may deviate from this process. Feel free to suggest these cases, and I'll add them to this page. | |||

| ## Getting started | |||

| Browse the [Examples](https://github.com/lark-parser/lark/tree/master/examples) to find a template that suits your purposes. | |||

| Read the tutorials to get a better understanding of how everything works. (links in the [main page](/)) | |||

| Use the [Cheatsheet (PDF)](lark_cheatsheet.pdf) for quick reference. | |||

| Use the reference pages for more in-depth explanations. (links in the [main page](/)] | |||

| ## LALR usage | |||

| By default Lark silently resolves Shift/Reduce conflicts as Shift. To enable warnings pass `debug=True`. To get the messages printed you have to configure `logging` framework beforehand. For example: | |||

| ```python | |||

| from lark import Lark | |||

| import logging | |||

| logging.basicConfig(level=logging.DEBUG) | |||

| collision_grammar = ''' | |||

| start: as as | |||

| as: a* | |||

| a: "a" | |||

| ''' | |||

| p = Lark(collision_grammar, parser='lalr', debug=True) | |||

| ``` | |||

+ 51

- 0

docs/index.md

View File

| @@ -0,0 +1,51 @@ | |||

| # Lark | |||

| A modern parsing library for Python | |||

| ## Overview | |||

| Lark can parse any context-free grammar. | |||

| Lark provides: | |||

| - Advanced grammar language, based on EBNF | |||

| - Three parsing algorithms to choose from: Earley, LALR(1) and CYK | |||

| - Automatic tree construction, inferred from your grammar | |||

| - Fast unicode lexer with regexp support, and automatic line-counting | |||

| Lark's code is hosted on Github: [https://github.com/lark-parser/lark](https://github.com/lark-parser/lark) | |||

| ### Install | |||

| ```bash | |||

| $ pip install lark-parser | |||

| ``` | |||

| #### Syntax Highlighting | |||

| - [Sublime Text & TextMate](https://github.com/lark-parser/lark_syntax) | |||

| - [Visual Studio Code](https://github.com/lark-parser/vscode-lark) (Or install through the vscode plugin system) | |||

| ----- | |||

| ## Documentation Index | |||

| * [Philosophy & Design Choices](philosophy.md) | |||

| * [Full List of Features](features.md) | |||

| * [Examples](https://github.com/lark-parser/lark/tree/master/examples) | |||

| * Tutorials | |||

| * [How to write a DSL](http://blog.erezsh.com/how-to-write-a-dsl-in-python-with-lark/) - Implements a toy LOGO-like language with an interpreter | |||

| * [How to write a JSON parser](json_tutorial.md) | |||

| * External | |||

| * [Program Synthesis is Possible](https://www.cs.cornell.edu/~asampson/blog/minisynth.html) - Creates a DSL for Z3 | |||

| * Guides | |||

| * [How to use Lark](how_to_use.md) | |||

| * [How to develop Lark](how_to_develop.md) | |||

| * Reference | |||

| * [Grammar](grammar.md) | |||

| * [Tree Construction](tree_construction.md) | |||

| * [Classes](classes.md) | |||

| * [Cheatsheet (PDF)](lark_cheatsheet.pdf) | |||

| * Discussion | |||

| * [Gitter](https://gitter.im/lark-parser/Lobby) | |||

| * [Forum (Google Groups)](https://groups.google.com/forum/#!forum/lark-parser) | |||

+ 444

- 0

docs/json_tutorial.md

View File

| @@ -0,0 +1,444 @@ | |||

| # Lark Tutorial - JSON parser | |||

| Lark is a parser - a program that accepts a grammar and text, and produces a structured tree that represents that text. | |||

| In this tutorial we will write a JSON parser in Lark, and explore Lark's various features in the process. | |||

| It has 5 parts. | |||

| 1. Writing the grammar | |||

| 2. Creating the parser | |||

| 3. Shaping the tree | |||

| 4. Evaluating the tree | |||

| 5. Optimizing | |||

| Knowledge assumed: | |||

| - Using Python | |||

| - A basic understanding of how to use regular expressions | |||

| ## Part 1 - The Grammar | |||

| Lark accepts its grammars in a format called [EBNF](https://www.wikiwand.com/en/Extended_Backus%E2%80%93Naur_form). It basically looks like this: | |||

| rule_name : list of rules and TERMINALS to match | |||

| | another possible list of items | |||

| | etc. | |||

| TERMINAL: "some text to match" | |||

| (*a terminal is a string or a regular expression*) | |||

| The parser will try to match each rule (left-part) by matching its items (right-part) sequentially, trying each alternative (In practice, the parser is predictive so we don't have to try every alternative). | |||

| How to structure those rules is beyond the scope of this tutorial, but often it's enough to follow one's intuition. | |||

| In the case of JSON, the structure is simple: A json document is either a list, or a dictionary, or a string/number/etc. | |||

| The dictionaries and lists are recursive, and contain other json documents (or "values"). | |||

| Let's write this structure in EBNF form: | |||

| value: dict | |||

| | list | |||

| | STRING | |||

| | NUMBER | |||

| | "true" | "false" | "null" | |||

| list : "[" [value ("," value)*] "]" | |||

| dict : "{" [pair ("," pair)*] "}" | |||

| pair : STRING ":" value | |||

| A quick explanation of the syntax: | |||

| - Parenthesis let us group rules together. | |||

| - rule\* means *any amount*. That means, zero or more instances of that rule. | |||

| - [rule] means *optional*. That means zero or one instance of that rule. | |||

| Lark also supports the rule+ operator, meaning one or more instances. It also supports the rule? operator which is another way to say *optional*. | |||

| Of course, we still haven't defined "STRING" and "NUMBER". Luckily, both these literals are already defined in Lark's common library: | |||

| %import common.ESCAPED_STRING -> STRING | |||

| %import common.SIGNED_NUMBER -> NUMBER | |||

| The arrow (->) renames the terminals. But that only adds obscurity in this case, so going forward we'll just use their original names. | |||

| We'll also take care of the white-space, which is part of the text. | |||

| %import common.WS | |||

| %ignore WS | |||

| We tell our parser to ignore whitespace. Otherwise, we'd have to fill our grammar with WS terminals. | |||

| By the way, if you're curious what these terminals signify, they are roughly equivalent to this: | |||

| NUMBER : /-?\d+(\.\d+)?([eE][+-]?\d+)?/ | |||

| STRING : /".*?(?<!\\)"/ | |||

| %ignore /[ \t\n\f\r]+/ | |||

| Lark will accept this, if you really want to complicate your life :) | |||

| You can find the original definitions in [common.lark](/lark/grammars/common.lark). | |||

| They're don't strictly adhere to [json.org](https://json.org/) - but our purpose here is to accept json, not validate it. | |||

| Notice that terminals are written in UPPER-CASE, while rules are written in lower-case. | |||

| I'll touch more on the differences between rules and terminals later. | |||

| ## Part 2 - Creating the Parser | |||

| Once we have our grammar, creating the parser is very simple. | |||

| We simply instantiate Lark, and tell it to accept a "value": | |||

| ```python | |||

| from lark import Lark | |||

| json_parser = Lark(r""" | |||

| value: dict | |||

| | list | |||

| | ESCAPED_STRING | |||

| | SIGNED_NUMBER | |||

| | "true" | "false" | "null" | |||

| list : "[" [value ("," value)*] "]" | |||

| dict : "{" [pair ("," pair)*] "}" | |||

| pair : ESCAPED_STRING ":" value | |||

| %import common.ESCAPED_STRING | |||

| %import common.SIGNED_NUMBER | |||

| %import common.WS | |||

| %ignore WS | |||

| """, start='value') | |||

| ``` | |||

| It's that simple! Let's test it out: | |||

| ```python | |||

| >>> text = '{"key": ["item0", "item1", 3.14]}' | |||

| >>> json_parser.parse(text) | |||

| Tree(value, [Tree(dict, [Tree(pair, [Token(STRING, "key"), Tree(value, [Tree(list, [Tree(value, [Token(STRING, "item0")]), Tree(value, [Token(STRING, "item1")]), Tree(value, [Token(NUMBER, 3.14)])])])])])]) | |||

| >>> print( _.pretty() ) | |||

| value | |||

| dict | |||

| pair | |||

| "key" | |||

| value | |||

| list | |||

| value "item0" | |||

| value "item1" | |||

| value 3.14 | |||

| ``` | |||

| As promised, Lark automagically creates a tree that represents the parsed text. | |||

| But something is suspiciously missing from the tree. Where are the curly braces, the commas and all the other punctuation literals? | |||

| Lark automatically filters out literals from the tree, based on the following criteria: | |||

| - Filter out string literals without a name, or with a name that starts with an underscore. | |||

| - Keep regexps, even unnamed ones, unless their name starts with an underscore. | |||

| Unfortunately, this means that it will also filter out literals like "true" and "false", and we will lose that information. The next section, "Shaping the tree" deals with this issue, and others. | |||

| ## Part 3 - Shaping the Tree | |||

| We now have a parser that can create a parse tree (or: AST), but the tree has some issues: | |||

| 1. "true", "false" and "null" are filtered out (test it out yourself!) | |||

| 2. Is has useless branches, like *value*, that clutter-up our view. | |||

| I'll present the solution, and then explain it: | |||

| ?value: dict | |||

| | list | |||

| | string | |||

| | SIGNED_NUMBER -> number | |||

| | "true" -> true | |||

| | "false" -> false | |||

| | "null" -> null | |||

| ... | |||

| string : ESCAPED_STRING | |||

| 1. Those little arrows signify *aliases*. An alias is a name for a specific part of the rule. In this case, we will name the *true/false/null* matches, and this way we won't lose the information. We also alias *SIGNED_NUMBER* to mark it for later processing. | |||

| 2. The question-mark prefixing *value* ("?value") tells the tree-builder to inline this branch if it has only one member. In this case, *value* will always have only one member, and will always be inlined. | |||

| 3. We turned the *ESCAPED_STRING* terminal into a rule. This way it will appear in the tree as a branch. This is equivalent to aliasing (like we did for the number), but now *string* can also be used elsewhere in the grammar (namely, in the *pair* rule). | |||

| Here is the new grammar: | |||

| ```python | |||

| from lark import Lark | |||

| json_parser = Lark(r""" | |||

| ?value: dict | |||

| | list | |||

| | string | |||

| | SIGNED_NUMBER -> number | |||

| | "true" -> true | |||

| | "false" -> false | |||

| | "null" -> null | |||

| list : "[" [value ("," value)*] "]" | |||

| dict : "{" [pair ("," pair)*] "}" | |||

| pair : string ":" value | |||

| string : ESCAPED_STRING | |||

| %import common.ESCAPED_STRING | |||

| %import common.SIGNED_NUMBER | |||

| %import common.WS | |||

| %ignore WS | |||

| """, start='value') | |||

| ``` | |||

| And let's test it out: | |||

| ```python | |||

| >>> text = '{"key": ["item0", "item1", 3.14, true]}' | |||

| >>> print( json_parser.parse(text).pretty() ) | |||

| dict | |||

| pair | |||

| string "key" | |||

| list | |||

| string "item0" | |||

| string "item1" | |||

| number 3.14 | |||

| true | |||

| ``` | |||

| Ah! That is much much nicer. | |||

| ## Part 4 - Evaluating the tree | |||

| It's nice to have a tree, but what we really want is a JSON object. | |||

| The way to do it is to evaluate the tree, using a Transformer. | |||

| A transformer is a class with methods corresponding to branch names. For each branch, the appropriate method will be called with the children of the branch as its argument, and its return value will replace the branch in the tree. | |||

| So let's write a partial transformer, that handles lists and dictionaries: | |||

| ```python | |||

| from lark import Transformer | |||

| class MyTransformer(Transformer): | |||

| def list(self, items): | |||

| return list(items) | |||

| def pair(self, (k,v)): | |||

| return k, v | |||

| def dict(self, items): | |||

| return dict(items) | |||

| ``` | |||

| And when we run it, we get this: | |||

| ```python | |||

| >>> tree = json_parser.parse(text) | |||

| >>> MyTransformer().transform(tree) | |||

| {Tree(string, [Token(ANONRE_1, "key")]): [Tree(string, [Token(ANONRE_1, "item0")]), Tree(string, [Token(ANONRE_1, "item1")]), Tree(number, [Token(ANONRE_0, 3.14)]), Tree(true, [])]} | |||

| ``` | |||

| This is pretty close. Let's write a full transformer that can handle the terminals too. | |||

| Also, our definitions of list and dict are a bit verbose. We can do better: | |||

| ```python | |||

| from lark import Transformer | |||

| class TreeToJson(Transformer): | |||

| def string(self, (s,)): | |||

| return s[1:-1] | |||

| def number(self, (n,)): | |||

| return float(n) | |||

| list = list | |||

| pair = tuple | |||

| dict = dict | |||

| null = lambda self, _: None | |||

| true = lambda self, _: True | |||

| false = lambda self, _: False | |||

| ``` | |||

| And when we run it: | |||

| ```python | |||

| >>> tree = json_parser.parse(text) | |||

| >>> TreeToJson().transform(tree) | |||

| {u'key': [u'item0', u'item1', 3.14, True]} | |||

| ``` | |||

| Magic! | |||

| ## Part 5 - Optimizing | |||

| ### Step 1 - Benchmark | |||

| By now, we have a fully working JSON parser, that can accept a string of JSON, and return its Pythonic representation. | |||

| But how fast is it? | |||

| Now, of course there are JSON libraries for Python written in C, and we can never compete with them. But since this is applicable to any parser you would write in Lark, let's see how far we can take this. | |||

| The first step for optimizing is to have a benchmark. For this benchmark I'm going to take data from [json-generator.com/](http://www.json-generator.com/). I took their default suggestion and changed it to 5000 objects. The result is a 6.6MB sparse JSON file. | |||

| Our first program is going to be just a concatenation of everything we've done so far: | |||

| ```python | |||

| import sys | |||

| from lark import Lark, Transformer | |||

| json_grammar = r""" | |||

| ?value: dict | |||

| | list | |||

| | string | |||

| | SIGNED_NUMBER -> number | |||

| | "true" -> true | |||

| | "false" -> false | |||

| | "null" -> null | |||

| list : "[" [value ("," value)*] "]" | |||

| dict : "{" [pair ("," pair)*] "}" | |||

| pair : string ":" value | |||

| string : ESCAPED_STRING | |||

| %import common.ESCAPED_STRING | |||

| %import common.SIGNED_NUMBER | |||

| %import common.WS | |||

| %ignore WS | |||

| """ | |||

| class TreeToJson(Transformer): | |||

| def string(self, (s,)): | |||

| return s[1:-1] | |||

| def number(self, (n,)): | |||

| return float(n) | |||

| list = list | |||

| pair = tuple | |||

| dict = dict | |||

| null = lambda self, _: None | |||

| true = lambda self, _: True | |||

| false = lambda self, _: False | |||

| json_parser = Lark(json_grammar, start='value', lexer='standard') | |||

| if __name__ == '__main__': | |||

| with open(sys.argv[1]) as f: | |||

| tree = json_parser.parse(f.read()) | |||

| print(TreeToJson().transform(tree)) | |||

| ``` | |||

| We run it and get this: | |||

| $ time python tutorial_json.py json_data > /dev/null | |||

| real 0m36.257s | |||

| user 0m34.735s | |||

| sys 0m1.361s | |||

| That's unsatisfactory time for a 6MB file. Maybe if we were parsing configuration or a small DSL, but we're trying to handle large amount of data here. | |||

| Well, turns out there's quite a bit we can do about it! | |||

| ### Step 2 - LALR(1) | |||

| So far we've been using the Earley algorithm, which is the default in Lark. Earley is powerful but slow. But it just so happens that our grammar is LR-compatible, and specifically LALR(1) compatible. | |||

| So let's switch to LALR(1) and see what happens: | |||

| ```python | |||

| json_parser = Lark(json_grammar, start='value', parser='lalr') | |||

| ``` | |||

| $ time python tutorial_json.py json_data > /dev/null | |||

| real 0m7.554s | |||

| user 0m7.352s | |||

| sys 0m0.148s | |||

| Ah, that's much better. The resulting JSON is of course exactly the same. You can run it for yourself and see. | |||

| It's important to note that not all grammars are LR-compatible, and so you can't always switch to LALR(1). But there's no harm in trying! If Lark lets you build the grammar, it means you're good to go. | |||

| ### Step 3 - Tree-less LALR(1) | |||

| So far, we've built a full parse tree for our JSON, and then transformed it. It's a convenient method, but it's not the most efficient in terms of speed and memory. Luckily, Lark lets us avoid building the tree when parsing with LALR(1). | |||

| Here's the way to do it: | |||

| ```python | |||

| json_parser = Lark(json_grammar, start='value', parser='lalr', transformer=TreeToJson()) | |||

| if __name__ == '__main__': | |||

| with open(sys.argv[1]) as f: | |||

| print( json_parser.parse(f.read()) ) | |||

| ``` | |||

| We've used the transformer we've already written, but this time we plug it straight into the parser. Now it can avoid building the parse tree, and just send the data straight into our transformer. The *parse()* method now returns the transformed JSON, instead of a tree. | |||

| Let's benchmark it: | |||

| real 0m4.866s | |||

| user 0m4.722s | |||

| sys 0m0.121s | |||

| That's a measurable improvement! Also, this way is more memory efficient. Check out the benchmark table at the end to see just how much. | |||

| As a general practice, it's recommended to work with parse trees, and only skip the tree-builder when your transformer is already working. | |||

| ### Step 4 - PyPy | |||

| PyPy is a JIT engine for running Python, and it's designed to be a drop-in replacement. | |||

| Lark is written purely in Python, which makes it very suitable for PyPy. | |||

| Let's get some free performance: | |||

| $ time pypy tutorial_json.py json_data > /dev/null | |||

| real 0m1.397s | |||

| user 0m1.296s | |||

| sys 0m0.083s | |||

| PyPy is awesome! | |||

| ### Conclusion | |||

| We've brought the run-time down from 36 seconds to 1.1 seconds, in a series of small and simple steps. | |||

| Now let's compare the benchmarks in a nicely organized table. | |||

| I measured memory consumption using a little script called [memusg](https://gist.github.com/netj/526585) | |||

| | Code | CPython Time | PyPy Time | CPython Mem | PyPy Mem | |||

| |:-----|:-------------|:------------|:----------|:--------- | |||

| | Lark - Earley *(with lexer)* | 42s | 4s | 1167M | 608M | | |||

| | Lark - LALR(1) | 8s | 1.53s | 453M | 266M | | |||

| | Lark - LALR(1) tree-less | 4.76s | 1.23s | 70M | 134M | | |||

| | PyParsing ([Parser](http://pyparsing.wikispaces.com/file/view/jsonParser.py)) | 32s | 3.53s | 443M | 225M | | |||

| | funcparserlib ([Parser](https://github.com/vlasovskikh/funcparserlib/blob/master/funcparserlib/tests/json.py)) | 8.5s | 1.3s | 483M | 293M | | |||

| | Parsimonious ([Parser](https://gist.githubusercontent.com/reclosedev/5222560/raw/5e97cf7eb62c3a3671885ec170577285e891f7d5/parsimonious_json.py)) | ? | 5.7s | ? | 1545M | | |||

| I added a few other parsers for comparison. PyParsing and funcparselib fair pretty well in their memory usage (they don't build a tree), but they can't compete with the run-time speed of LALR(1). | |||

| These benchmarks are for Lark's alpha version. I already have several optimizations planned that will significantly improve run-time speed. | |||

| Once again, shout-out to PyPy for being so effective. | |||

| ## Afterword | |||

| This is the end of the tutorial. I hoped you liked it and learned a little about Lark. | |||

| To see what else you can do with Lark, check out the [examples](/examples). | |||

| For questions or any other subject, feel free to email me at erezshin at gmail dot com. | |||

BIN

docs/lark_cheatsheet.pdf

View File

+ 49

- 0

docs/parsers.md

View File

| @@ -0,0 +1,49 @@ | |||

| Lark implements the following parsing algorithms: Earley, LALR(1), and CYK | |||

| # Earley | |||

| An [Earley Parser](https://www.wikiwand.com/en/Earley_parser) is a chart parser capable of parsing any context-free grammar at O(n^3), and O(n^2) when the grammar is unambiguous. It can parse most LR grammars at O(n). Most programming languages are LR, and can be parsed at a linear time. | |||

| Lark's Earley implementation runs on top of a skipping chart parser, which allows it to use regular expressions, instead of matching characters one-by-one. This is a huge improvement to Earley that is unique to Lark. This feature is used by default, but can also be requested explicitely using `lexer='dynamic'`. | |||

| It's possible to bypass the dynamic lexing, and use the regular Earley parser with a traditional lexer, that tokenizes as an independant first step. Doing so will provide a speed benefit, but will tokenize without using Earley's ambiguity-resolution ability. So choose this only if you know why! Activate with `lexer='standard'` | |||

| **SPPF & Ambiguity resolution** | |||

| Lark implements the Shared Packed Parse Forest data-structure for the Earley parser, in order to reduce the space and computation required to handle ambiguous grammars. | |||

| You can read more about SPPF [here](http://www.bramvandersanden.com/post/2014/06/shared-packed-parse-forest/) | |||

| As a result, Lark can efficiently parse and store every ambiguity in the grammar, when using Earley. | |||

| Lark provides the following options to combat ambiguity: | |||

| 1) Lark will choose the best derivation for you (default). Users can choose between different disambiguation strategies, and can prioritize (or demote) individual rules over others, using the rule-priority syntax. | |||

| 2) Users may choose to recieve the set of all possible parse-trees (using ambiguity='explicit'), and choose the best derivation themselves. While simple and flexible, it comes at the cost of space and performance, and so it isn't recommended for highly ambiguous grammars, or very long inputs. | |||

| 3) As an advanced feature, users may use specialized visitors to iterate the SPPF themselves. Future versions of Lark intend to improve and simplify this interface. | |||

| **dynamic_complete** | |||

| **TODO: Add documentation on dynamic_complete** | |||

| # LALR(1) | |||

| [LALR(1)](https://www.wikiwand.com/en/LALR_parser) is a very efficient, true-and-tested parsing algorithm. It's incredibly fast and requires very little memory. It can parse most programming languages (For example: Python and Java). | |||

| Lark comes with an efficient implementation that outperforms every other parsing library for Python (including PLY) | |||

| Lark extends the traditional YACC-based architecture with a *contextual lexer*, which automatically provides feedback from the parser to the lexer, making the LALR(1) algorithm stronger than ever. | |||

| The contextual lexer communicates with the parser, and uses the parser's lookahead prediction to narrow its choice of tokens. So at each point, the lexer only matches the subgroup of terminals that are legal at that parser state, instead of all of the terminals. It’s surprisingly effective at resolving common terminal collisions, and allows to parse languages that LALR(1) was previously incapable of parsing. | |||

| This is an improvement to LALR(1) that is unique to Lark. | |||

| # CYK Parser | |||

| A [CYK parser](https://www.wikiwand.com/en/CYK_algorithm) can parse any context-free grammar at O(n^3*|G|). | |||

| Its too slow to be practical for simple grammars, but it offers good performance for highly ambiguous grammars. | |||

+ 63

- 0

docs/philosophy.md

View File

| @@ -0,0 +1,63 @@ | |||

| # Philosophy | |||

| Parsers are innately complicated and confusing. They're difficult to understand, difficult to write, and difficult to use. Even experts on the subject can become baffled by the nuances of these complicated state-machines. | |||

| Lark's mission is to make the process of writing them as simple and abstract as possible, by following these design principles: | |||

| ### Design Principles | |||

| 1. Readability matters | |||

| 2. Keep the grammar clean and simple | |||

| 2. Don't force the user to decide on things that the parser can figure out on its own | |||

| 4. Usability is more important than performance | |||

| 5. Performance is still very important | |||

| 6. Follow the Zen Of Python, whenever possible and applicable | |||

| In accordance with these principles, I arrived at the following design choices: | |||

| ----------- | |||

| # Design Choices | |||

| ### 1. Separation of code and grammar | |||

| Grammars are the de-facto reference for your language, and for the structure of your parse-tree. For any non-trivial language, the conflation of code and grammar always turns out convoluted and difficult to read. | |||

| The grammars in Lark are EBNF-inspired, so they are especially easy to read & work with. | |||

| ### 2. Always build a parse-tree (unless told not to) | |||

| Trees are always simpler to work with than state-machines. | |||

| 1. Trees allow you to see the "state-machine" visually | |||

| 2. Trees allow your computation to be aware of previous and future states | |||

| 3. Trees allow you to process the parse in steps, instead of forcing you to do it all at once. | |||

| And anyway, every parse-tree can be replayed as a state-machine, so there is no loss of information. | |||

| See this answer in more detail [here](https://github.com/erezsh/lark/issues/4). | |||

| To improve performance, you can skip building the tree for LALR(1), by providing Lark with a transformer (see the [JSON example](https://github.com/erezsh/lark/blob/master/examples/json_parser.py)). | |||

| ### 3. Earley is the default | |||

| The Earley algorithm can accept *any* context-free grammar you throw at it (i.e. any grammar you can write in EBNF, it can parse). That makes it extremely friendly to beginners, who are not aware of the strange and arbitrary restrictions that LALR(1) places on its grammars. | |||

| As the users grow to understand the structure of their grammar, the scope of their target language, and their performance requirements, they may choose to switch over to LALR(1) to gain a huge performance boost, possibly at the cost of some language features. | |||

| In short, "Premature optimization is the root of all evil." | |||

| ### Other design features | |||

| - Automatically resolve terminal collisions whenever possible | |||

| - Automatically keep track of line & column numbers | |||

+ 76

- 0

docs/recipes.md

View File

| @@ -0,0 +1,76 @@ | |||

| # Recipes | |||

| A collection of recipes to use Lark and its various features | |||

| ## lexer_callbacks | |||

| Use it to interface with the lexer as it generates tokens. | |||

| Accepts a dictionary of the form | |||

| {TOKEN_TYPE: callback} | |||

| Where callback is of type `f(Token) -> Token` | |||

| It only works with the standard and contextual lexers. | |||

| ### Example 1: Replace string values with ints for INT tokens | |||

| ```python | |||

| from lark import Lark, Token | |||

| def tok_to_int(tok): | |||

| "Convert the value of `tok` from string to int, while maintaining line number & column." | |||

| # tok.type == 'INT' | |||

| return Token.new_borrow_pos(tok.type, int(tok), tok) | |||

| parser = Lark(""" | |||

| start: INT* | |||

| %import common.INT | |||

| %ignore " " | |||

| """, parser="lalr", lexer_callbacks = {'INT': tok_to_int}) | |||

| print(parser.parse('3 14 159')) | |||

| ``` | |||

| Prints out: | |||

| ```python | |||

| Tree(start, [Token(INT, 3), Token(INT, 14), Token(INT, 159)]) | |||

| ``` | |||

| ### Example 2: Collect all comments | |||

| ```python | |||

| from lark import Lark | |||

| comments = [] | |||

| parser = Lark(""" | |||

| start: INT* | |||

| COMMENT: /#.*/ | |||

| %import common (INT, WS) | |||

| %ignore COMMENT | |||

| %ignore WS | |||

| """, parser="lalr", lexer_callbacks={'COMMENT': comments.append}) | |||

| parser.parse(""" | |||

| 1 2 3 # hello | |||

| # world | |||

| 4 5 6 | |||

| """) | |||

| print(comments) | |||

| ``` | |||

| Prints out: | |||

| ```python | |||

| [Token(COMMENT, '# hello'), Token(COMMENT, '# world')] | |||

| ``` | |||

| *Note: We don't have to return a token, because comments are ignored* | |||

+ 147

- 0

docs/tree_construction.md

View File

| @@ -0,0 +1,147 @@ | |||

| # Automatic Tree Construction - Reference | |||

| Lark builds a tree automatically based on the structure of the grammar, where each rule that is matched becomes a branch (node) in the tree, and its children are its matches, in the order of matching. | |||

| For example, the rule `node: child1 child2` will create a tree node with two children. If it is matched as part of another rule (i.e. if it isn't the root), the new rule's tree node will become its parent. | |||

| Using `item+` or `item*` will result in a list of items, equivalent to writing `item item item ..`. | |||

| ### Terminals | |||

| Terminals are always values in the tree, never branches. | |||

| Lark filters out certain types of terminals by default, considering them punctuation: | |||

| - Terminals that won't appear in the tree are: | |||

| - Unnamed literals (like `"keyword"` or `"+"`) | |||

| - Terminals whose name starts with an underscore (like `_DIGIT`) | |||

| - Terminals that *will* appear in the tree are: | |||

| - Unnamed regular expressions (like `/[0-9]/`) | |||

| - Named terminals whose name starts with a letter (like `DIGIT`) | |||

| Note: Terminals composed of literals and other terminals always include the entire match without filtering any part. | |||

| **Example:** | |||

| ``` | |||

| start: PNAME pname | |||

| PNAME: "(" NAME ")" | |||

| pname: "(" NAME ")" | |||

| NAME: /\w+/ | |||

| %ignore /\s+/ | |||

| ``` | |||

| Lark will parse "(Hello) (World)" as: | |||

| start | |||

| (Hello) | |||

| pname World | |||

| Rules prefixed with `!` will retain all their literals regardless. | |||

| **Example:** | |||

| ```perl | |||

| expr: "(" expr ")" | |||

| | NAME+ | |||

| NAME: /\w+/ | |||

| %ignore " " | |||

| ``` | |||

| Lark will parse "((hello world))" as: | |||

| expr | |||

| expr | |||

| expr | |||

| "hello" | |||

| "world" | |||

| The brackets do not appear in the tree by design. The words appear because they are matched by a named terminal. | |||

| # Shaping the tree | |||

| Users can alter the automatic construction of the tree using a collection of grammar features. | |||

| * Rules whose name begins with an underscore will be inlined into their containing rule. | |||

| **Example:** | |||

| ```perl | |||

| start: "(" _greet ")" | |||

| _greet: /\w+/ /\w+/ | |||

| ``` | |||

| Lark will parse "(hello world)" as: | |||

| start | |||

| "hello" | |||

| "world" | |||

| * Rules that receive a question mark (?) at the beginning of their definition, will be inlined if they have a single child, after filtering. | |||

| **Example:** | |||

| ```ruby | |||

| start: greet greet | |||

| ?greet: "(" /\w+/ ")" | |||

| | /\w+/ /\w+/ | |||

| ``` | |||

| Lark will parse "hello world (planet)" as: | |||

| start | |||

| greet | |||

| "hello" | |||

| "world" | |||

| "planet" | |||

| * Rules that begin with an exclamation mark will keep all their terminals (they won't get filtered). | |||

| ```perl | |||

| !expr: "(" expr ")" | |||

| | NAME+ | |||

| NAME: /\w+/ | |||

| %ignore " " | |||

| ``` | |||

| Will parse "((hello world))" as: | |||

| expr | |||

| ( | |||

| expr | |||

| ( | |||

| expr | |||

| hello | |||

| world | |||

| ) | |||

| ) | |||

| Using the `!` prefix is usually a "code smell", and may point to a flaw in your grammar design. | |||

| * Aliases - options in a rule can receive an alias. It will be then used as the branch name for the option, instead of the rule name. | |||

| **Example:** | |||

| ```ruby | |||

| start: greet greet | |||

| greet: "hello" | |||

| | "world" -> planet | |||

| ``` | |||

| Lark will parse "hello world" as: | |||

| start | |||

| greet | |||

| planet | |||

+ 0

- 13

doupdate.sh

View File

| @@ -1,13 +0,0 @@ | |||

| #!/bin/sh | |||

| runtime=$(TZ=UTC date +'%Y-%m-%dT%HZ') | |||

| while read repourl name c; do | |||

| baseref="gm/$runtime/$name" | |||

| mkdir -p "gm/$name" | |||

| git ls-remote "$repourl" > "gm/$name/${runtime}.refs.txt" | |||

| #dr="--dry-run" | |||

| git fetch $dr --no-tags "$repourl" +refs/tags/*:refs/tags/"$baseref/*" +refs/heads/*:refs/heads/"$baseref/*" | |||

| done <<EOF | |||

| $(python3 reponames.py < repos.txt) | |||

| EOF | |||

+ 33

- 0

examples/README.md

View File

| @@ -0,0 +1,33 @@ | |||

| # Examples for Lark | |||

| #### How to run the examples | |||

| After cloning the repo, open the terminal into the root directory of the project, and run the following: | |||

| ```bash | |||

| [lark]$ python -m examples.<name_of_example> | |||

| ``` | |||

| For example, the following will parse all the Python files in the standard library of your local installation: | |||

| ```bash | |||

| [lark]$ python -m examples.python_parser | |||

| ``` | |||

| ### Beginners | |||

| - [calc.py](calc.py) - A simple example of a REPL calculator | |||

| - [json\_parser.py](json_parser.py) - A simple JSON parser (comes with a tutorial, see docs) | |||

| - [indented\_tree.py](indented\_tree.py) - A demonstration of parsing indentation ("whitespace significant" language) | |||

| - [fruitflies.py](fruitflies.py) - A demonstration of ambiguity | |||

| - [turtle\_dsl.py](turtle_dsl.py) - Implements a LOGO-like toy language for Python's turtle, with interpreter. | |||

| - [lark\_grammar.py](lark_grammar.py) + [lark.lark](lark.lark) - A reference implementation of the Lark grammar (using LALR(1) + standard lexer) | |||

| ### Advanced | |||

| - [error\_reporting\_lalr.py](error_reporting_lalr.py) - A demonstration of example-driven error reporting with the LALR parser | |||

| - [python\_parser.py](python_parser.py) - A fully-working Python 2 & 3 parser (but not production ready yet!) | |||

| - [conf\_lalr.py](conf_lalr.py) - Demonstrates the power of LALR's contextual lexer on a toy configuration language | |||

| - [conf\_earley.py](conf_earley.py) - Demonstrates the power of Earley's dynamic lexer on a toy configuration language | |||

| - [custom\_lexer.py](custom_lexer.py) - Demonstrates using a custom lexer to parse a non-textual stream of data | |||

| - [reconstruct\_json.py](reconstruct_json.py) - Demonstrates the experimental text-reconstruction feature | |||

+ 0

- 0

examples/__init__.py

View File

+ 75

- 0

examples/calc.py

View File

| @@ -0,0 +1,75 @@ | |||

| # | |||

| # This example shows how to write a basic calculator with variables. | |||

| # | |||

| from lark import Lark, Transformer, v_args | |||

| try: | |||

| input = raw_input # For Python2 compatibility | |||

| except NameError: | |||

| pass | |||

| calc_grammar = """ | |||